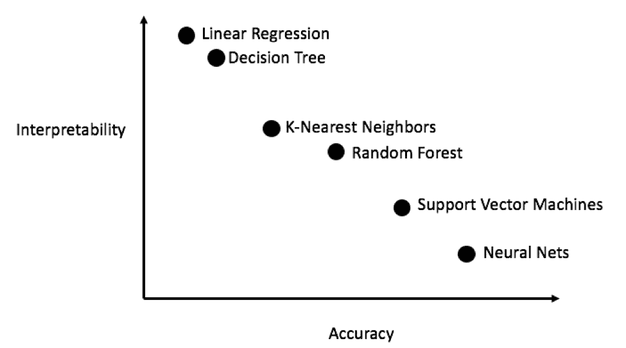

The field of AI is developing rapidly, with steady gains in performance and frequent architectural advances. Through all of it, models remain predominantly black boxes: quantitative statements can be made about how they turn inputs into outputs, but there is far less certainty about the exact mechanisms that cause a specific output to be produced. Interpretability and Alignment are two sub-fields of machine learning that seek to increase our knowledge of the internal mechanisms by which specific models achieve their results.

Interpretability

concerns itself with the analysis of existing model parameters, for example the way in which state-of-the-art chain-of-thought models perform their reasoning, as in Dutta et al. The methods by which this is achieved are varied, ranging from interesting experiments such as the ones produced by Google's DeepDream to mathematically rigorous analysis of sparse autoencoders.

As I'm writing this, an exciting new development is Skip Transcoders, a topic on which I hope to be able to write more soon.

Alignment

concerns itself with how the training goals of a model influence the model's final state. In the extreme, the field concerns itself with the evaluation of high-complexity goals and how we could transfer those goals to a sufficiently advanced model in some possible future (and the many pitfalls of this endeavour, e.g. mesa-optimization in Hubinger et al.). However, there are also more immediately relevant aspects of the field, such as the management and consequences of distributional shift in current state-of-the-art models.

Community Discussions

If you would like to interact with me on these topics, I can often be found in the following community spaces:

- EleutherAI: A Discord community of researchers from all fields of AI, including Interpretability, Alignment and more. They have released an LLM, GPT-Neo, trained on the Pile, a large and diverse text dataset, and they host constant discussion of all the above topics.

- Stampy.ai: A Discord community focused on the topic of Alignment, with the goal of creating a large repository of concisely explained information on the field, including a chatbot built for the specific purpose of answering questions on the subject. After setting up the early project's MediaWiki infrastructure, I have scaled back my involvement with the current development, but continue to follow the project.